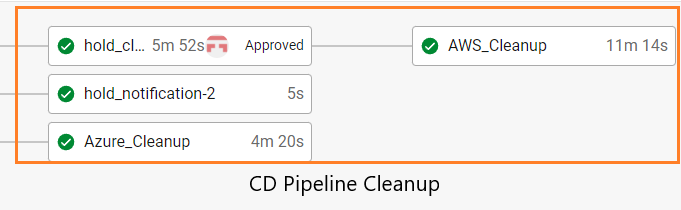

Cleanup

This segment of the pipeline cleans up all of the resources running in the cloud. Cleanup would not usually be part of a pipeline, but it was implemented here as a cost-saving measure.

Steps

Hold

This hold lets the administrator decide whether the production version should be left up or taken down at the end of the pipeline. It sends a notification to a Discord server to alert the administrator that the pipeline is waiting at the hold.
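As a rough illustration of the notification part of this step, a Discord alert can be sent by posting a small JSON body to a webhook. The sketch below is not taken from the pipeline: the helper names `build_hold_payload` and `notify_hold` and the `DISCORD_WEBHOOK_URL` environment variable are assumptions made for this example. It also assumes the message contains no double quotes or backslashes, because it does no JSON escaping.

```shell
# Sketch only: helper names and DISCORD_WEBHOOK_URL are hypothetical.

# Build the JSON body for a Discord webhook message.
# Assumes the message has no double quotes or backslashes (no JSON escaping).
build_hold_payload() {
  printf '{"content": "%s"}' "$1"
}

# POST the message to the webhook URL stored in DISCORD_WEBHOOK_URL.
# --fail makes curl exit non-zero on an HTTP error response.
notify_hold() {
  curl --silent --show-error --fail \
    --header "Content-Type: application/json" \
    --data "$(build_hold_payload "$1")" \
    "${DISCORD_WEBHOOK_URL}"
}
```

A `run` step could then call something like `notify_hold "Cleanup hold reached: approve to take production down"` before the pipeline pauses.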

Azure_Cleanup

    Azure_Cleanup:
      docker:
        - image: dudesm00thie/kubeaz:latest
          user: root
      steps:
        - checkout
        - attach_workspace:
            at: /root
        - run:
            name: Login to Azure
            command: |
              az login --service-principal --username "${AZURE_CLIENT_ID}" --password "${AZURE_CLIENT_SECRET}" --tenant "${AZURE_TENANT_ID}"
        - run:
            name: Delete Kubernetes Cluster
            command: |
              az aks delete --resource-group buypotatoResourceGroup --name buypotatoStaging --yes

This step runs after the pipeline has checked that production is up and working. It does not run immediately after the staging tests complete because, if the production version does not work, staging can be used temporarily while production is being fixed. The step logs in to Azure and deletes the Kubernetes cluster that staging runs in.
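If this job is ever re-run after the cluster has already been removed, it can help for the step to say so clearly. The following is a minimal sketch of a guarded delete, assuming that a failing `az aks show` means the cluster is gone (it can also fail for other reasons, such as an expired login). The function name `delete_staging_cluster` is hypothetical; the resource group and cluster name are the ones used in the job above.

```shell
# Sketch only: delete_staging_cluster is a hypothetical helper name.
# Resource group and cluster name are the ones used in the job above.
delete_staging_cluster() {
  resource_group="buypotatoResourceGroup"
  cluster="buypotatoStaging"

  # `az aks show` exits non-zero when it cannot return the cluster.
  if az aks show --resource-group "$resource_group" --name "$cluster" >/dev/null 2>&1; then
    az aks delete --resource-group "$resource_group" --name "$cluster" --yes
  else
    echo "Cluster $cluster not found in $resource_group; nothing to delete."
  fi
}
```

The function would be called after the `az login` step, in place of the bare `az aks delete` command.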

AWS_Cleanup

    AWS_Cleanup:
      docker:
        - image: dudesm00thie/kubeeks
      steps:
        - checkout
        - attach_workspace:
            at: /root
        - run:
            name: Login to AWS
            command: |
              aws configure set aws_access_key_id "${AWS_ACCESS_KEY}"
              aws configure set aws_secret_access_key "${AWS_SECRET_KEY}"
              aws configure set default.region us-east-1
              aws configure set default.output json
        - run:
            name: cleanup AWS
            command: |
              export POLICY_ARN=$(aws iam list-policies \
                --query 'Policies[?PolicyName==`AllowExternalDNSUpdates`].Arn' --output text)
              export EKS_CLUSTER_NAME="buypotatoprod"
              export EKS_CLUSTER_REGION="us-east-1"
              export KUBECONFIG="$HOME/.kube/${EKS_CLUSTER_NAME}-${EKS_CLUSTER_REGION}.yaml"
              export AWS_PAGER=""
              eksctl delete cluster --name $EKS_CLUSTER_NAME --region $EKS_CLUSTER_REGION
              aws cloudformation delete-stack --stack-name eksctl-buypotatoprod-cluster
              export AWS_PAGER=""

This step takes down the stacks that make up the production version. Because eksctl creates CloudFormation stacks to manage the resources it provisions, it is necessary to use eksctl to take the production version down.
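Since the stacks that eksctl creates are named after the cluster (the job above deletes `eksctl-buypotatoprod-cluster`), one way to check that nothing was left behind is to list the stacks that share that prefix. The sketch below relies on that naming assumption; `list_eksctl_stacks` is a hypothetical helper and is not part of the pipeline.

```shell
# Sketch only: list_eksctl_stacks is a hypothetical helper name.
# Assumes eksctl-created stacks are named "eksctl-<cluster>-...".
list_eksctl_stacks() {
  cluster="$1"
  region="$2"

  # Filter the region's stacks by name prefix using a JMESPath query.
  aws cloudformation describe-stacks \
    --region "$region" \
    --query "Stacks[?starts_with(StackName, 'eksctl-${cluster}-')].StackName" \
    --output text
}
```

Running `list_eksctl_stacks buypotatoprod us-east-1` after the cleanup step should list no stacks once deletion has finished; any name that still appears points to a stack that needs attention.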