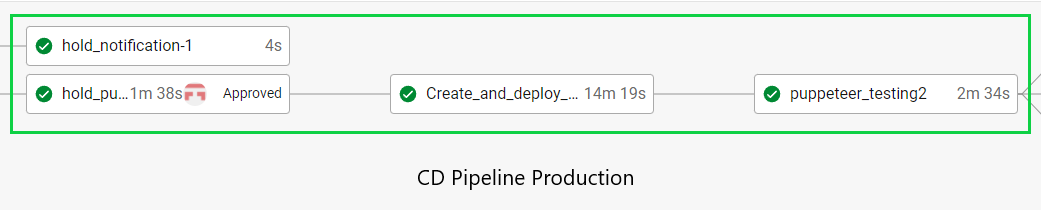

Production

Input: This segment of the pipeline requires an administrator to approve a hold before the application is deployed. The hold is created after the tests in the staging segment confirm that the application is functioning. This segment also requires the application's Docker images to be built and the AWS configuration to be completed.

Output: The output of this segment is a working production deployment accessible at frontend.prod.wadestern.com. The pipeline then runs the same tests as the staging segment to confirm that the application works on AWS.
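The Input and Output descriptions form a chain: staging tests, then the hold, then the production deploy, then the production tests. One way to see why a second, identically defined test job was needed is to model the workflow as a dependency graph in which each job name is a single node. The Python sketch below is illustrative only: "deploy_staging" and "puppeteer_testing" are hypothetical names for the staging jobs, while the other names come from this document.

```python
from graphlib import CycleError, TopologicalSorter

# Each job maps to the set of jobs it requires. "deploy_staging" and
# "puppeteer_testing" are hypothetical staging job names; the rest are
# the job names used in this document.
PIPELINE = {
    "puppeteer_testing": {"deploy_staging"},
    "hold": {"puppeteer_testing"},
    "Create_and_deploy_prod": {"hold"},
    "puppeteer_testing2": {"Create_and_deploy_prod"},
}


def run_order(graph: dict[str, set[str]]) -> list[str]:
    """Return one valid execution order for the jobs, or raise CycleError."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(run_order(PIPELINE))

    # Reusing a single test node for both the staging and the production run
    # makes it depend on its own downstream jobs, which is a cycle.
    reused = {
        "puppeteer_testing": {"deploy_staging", "Create_and_deploy_prod"},
        "hold": {"puppeteer_testing"},
        "Create_and_deploy_prod": {"hold"},
    }
    try:
        run_order(reused)
    except CycleError as err:
        print("cycle:", err.args[1])
```

Because the chain is linear, the only valid order is deploy_staging, puppeteer_testing, hold, Create_and_deploy_prod, puppeteer_testing2. CircleCI also documents a name: key on workflow job entries for calling the same job more than once, which might be an alternative to maintaining two copies of the job definition.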

Steps

Hold

The hold step has two parts. The first pauses the pipeline until an administrator approves it. The second sends a message to a Discord server notifying its members that the pipeline is waiting at a hold. The hold lets administrators decide whether to deploy to production.
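This document does not say how the Discord message is sent. Assuming it uses a Discord incoming webhook, the notification is a single JSON POST whose content field carries the message text. In CircleCI the pause itself is normally an approval job in the workflow (type: approval), which needs no code, so the Python sketch below covers only the notification. DISCORD_WEBHOOK_URL is a hypothetical environment variable; CIRCLE_BUILD_URL and CIRCLE_BRANCH are built-in CircleCI variables.

```python
import json
import os
import urllib.request

DISCORD_CONTENT_LIMIT = 2000  # Discord rejects message content longer than this


def build_hold_message(build_url: str, branch: str) -> dict:
    """Build a webhook payload announcing that production is waiting on a hold."""
    text = (
        f"Production deploy of branch '{branch}' is waiting for approval: "
        f"{build_url}"
    )
    return {"content": text[:DISCORD_CONTENT_LIMIT]}


def notify_discord(webhook_url: str, payload: dict) -> int:
    """POST the payload as JSON to a Discord webhook and return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Explicit User-Agent; some services reject generic library defaults.
            "User-Agent": "pipeline-hold-notifier/1.0",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    # DISCORD_WEBHOOK_URL is a hypothetical secret stored in CircleCI.
    webhook = os.environ.get("DISCORD_WEBHOOK_URL")
    if webhook:
        message = build_hold_message(
            os.environ.get("CIRCLE_BUILD_URL", "(build URL unavailable)"),
            os.environ.get("CIRCLE_BRANCH", "(unknown branch)"),
        )
        print(notify_discord(webhook, message))
```

Keeping the payload builder separate from the HTTP call makes the message easy to check without contacting Discord.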

Create_and_deploy_prod

```yaml
Create_and_deploy_prod:
  docker:
    - image: dudesm00thie/kubeeks
  steps:
    - checkout
    - run:
        name: Login to AWS
        command: |
          aws configure set aws_access_key_id "${AWS_ACCESS_KEY}"
          aws configure set aws_secret_access_key "${AWS_SECRET_KEY}"
          aws configure set default.region us-east-1
          aws configure set default.output json
    - run:
        name: Setup and create cluster
        command: |
          export POLICY_ARN=$(aws iam list-policies \
            --query 'Policies[?PolicyName==`AllowExternalDNSUpdates`].Arn' --output text)
          export EKS_CLUSTER_NAME="buypotatoprod"
          export EKS_CLUSTER_REGION="us-east-1"
          export KUBECONFIG="$HOME/.kube/${EKS_CLUSTER_NAME}-${EKS_CLUSTER_REGION}.yaml"
          export AWS_PAGER=""

          # Create the cluster
          eksctl create cluster --name "$EKS_CLUSTER_NAME" --region "$EKS_CLUSTER_REGION"

          # Verify OIDC is supported
          aws eks describe-cluster --name "$EKS_CLUSTER_NAME" \
            --query "cluster.identity.oidc.issuer" --output text

          # Associate OIDC provider with the cluster
          eksctl utils associate-iam-oidc-provider \
            --cluster "$EKS_CLUSTER_NAME" --region "$EKS_CLUSTER_REGION" --approve

          # Create service account
          eksctl create iamserviceaccount \
            --region "$EKS_CLUSTER_REGION" \
            --cluster "$EKS_CLUSTER_NAME" \
            --name "external-dns" \
            --namespace "${EXTERNALDNS_NS:-default}" \
            --attach-policy-arn "$POLICY_ARN" \
            --approve

          # kubectl statements
          #kubectl create namespace default
          kubectl create --filename deploy/externaldns-with-rbac.yaml \
            --namespace default

          # Apply frontend/backend
          kubectl apply -f deploy/prod-backend-deploy.yaml
          kubectl apply -f deploy/production-frontend-deploy.yaml
```

This job creates the Kubernetes cluster in AWS (Amazon EKS), sets up IAM permissions for ExternalDNS, deploys ExternalDNS, and deploys the application frontend and backend. It starts by logging in to the AWS account created for this role, then sets the environment variables the rest of the job needs and creates the Kubernetes cluster with eksctl. Next it associates an OIDC provider with the cluster and creates the external-dns service account with the AllowExternalDNSUpdates policy attached, which gives ExternalDNS permission to update Route 53. Finally it deploys ExternalDNS and applies the frontend and backend manifests. ExternalDNS automatically creates DNS records in Route 53 when it sees the frontend and backend come up.
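In the Setup and create cluster step, POLICY_ARN comes from a JMESPath filter over the JSON returned by aws iam list-policies. Written out in Python, the same filter is a list comprehension. The sketch below is an illustration rather than part of the pipeline, and it adds one thing the shell version lacks: it fails immediately when the AllowExternalDNSUpdates policy is missing, whereas the shell substitution leaves POLICY_ARN empty and the problem only surfaces later in eksctl create iamserviceaccount. The sample JSON and account ID are made up.

```python
import json


def find_policy_arn(list_policies_json: str, policy_name: str) -> str:
    """Return the ARN of the single policy called policy_name.

    Same filter as the JMESPath query
    Policies[?PolicyName==`AllowExternalDNSUpdates`].Arn, but it raises
    instead of yielding an empty string when there is no unique match.
    """
    policies = json.loads(list_policies_json).get("Policies", [])
    matches = [p["Arn"] for p in policies if p.get("PolicyName") == policy_name]
    if len(matches) != 1:
        raise LookupError(
            f"expected exactly one policy named {policy_name!r}, found {len(matches)}"
        )
    return matches[0]


if __name__ == "__main__":
    # Made-up sample shaped like `aws iam list-policies --output json`.
    sample = json.dumps({
        "Policies": [
            {"PolicyName": "AllowExternalDNSUpdates",
             "Arn": "arn:aws:iam::123456789012:policy/AllowExternalDNSUpdates"},
            {"PolicyName": "SomeOtherPolicy",
             "Arn": "arn:aws:iam::123456789012:policy/SomeOtherPolicy"},
        ]
    })
    print(find_policy_arn(sample, "AllowExternalDNSUpdates"))
```

In the shell step itself, a guard such as test -n "$POLICY_ARN" right after the lookup would give a similar early failure.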

Puppeteer_testing2

```yaml
puppeteer_testing2:
  working_directory: ~/repo/testing
  docker:
    - image: mudbone67/node-pup-chr
  environment:
    prodorstaging: prod
  steps:
    - checkout:
        path: ~/repo
    - run:
        name: Update NPM
        command: npm install -g npm
    - restore_cache:
        key: dependency-cache-{{ checksum "package-lock.json" }}
    - run:
        name: Install Dependencies
        command: npm install
    - save_cache:
        key: dependency-cache-{{ checksum "package-lock.json" }}
        paths:
          - ./node_modules
    - run:
        name: Wait
        command: sleep 120
    - run:
        name: Run tests
        command: npm test -- --forceExit
```

This Puppeteer test is the same as the test performed during staging. Because of limitations in CircleCI, two identical jobs had to be created so that the pipeline would run in the correct order. The job installs all of the dependencies, then waits two minutes to give the DNS records time to update before it runs the tests. The tests check that the frontend, backend, and database can all communicate with each other.
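The fixed sleep 120 in the Wait step is a guess at how long the Route 53 records take to appear. One alternative is a bounded polling loop that stops as soon as the production hostname resolves. The Python sketch below assumes the hostname frontend.prod.wadestern.com from the Output section, and it makes the sleep and clock functions injectable so the loop can be tested without actually waiting. Resolution only shows that the record exists, not that the frontend is serving traffic, so the Puppeteer tests remain the real check.

```python
import socket
import time
from typing import Callable


def dns_resolves(host: str, port: int = 443) -> bool:
    """Return True if host currently resolves to at least one address."""
    try:
        return len(socket.getaddrinfo(host, port)) > 0
    except socket.gaierror:
        return False


def wait_until(
    check: Callable[[], bool],
    timeout: float = 300.0,
    interval: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll check(), sleeping interval seconds between attempts,
    until it returns True or timeout seconds have passed."""
    deadline = clock() + timeout
    while True:
        if check():
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

A Wait step built on this would call something like wait_until(lambda: dns_resolves("frontend.prod.wadestern.com"), timeout=300) and fail the job if it returns False, so a slow DNS update produces a clear failure instead of confusing test errors.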